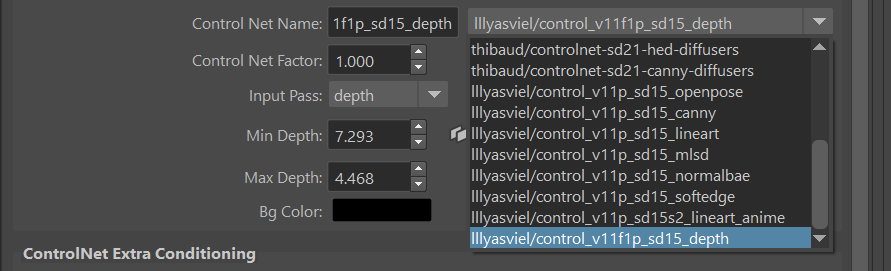

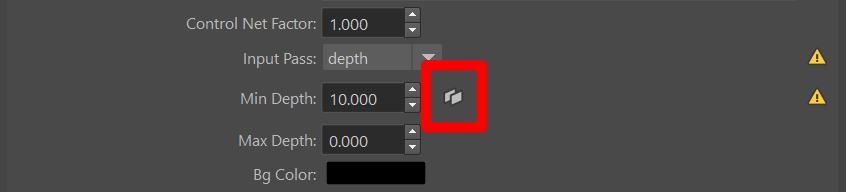

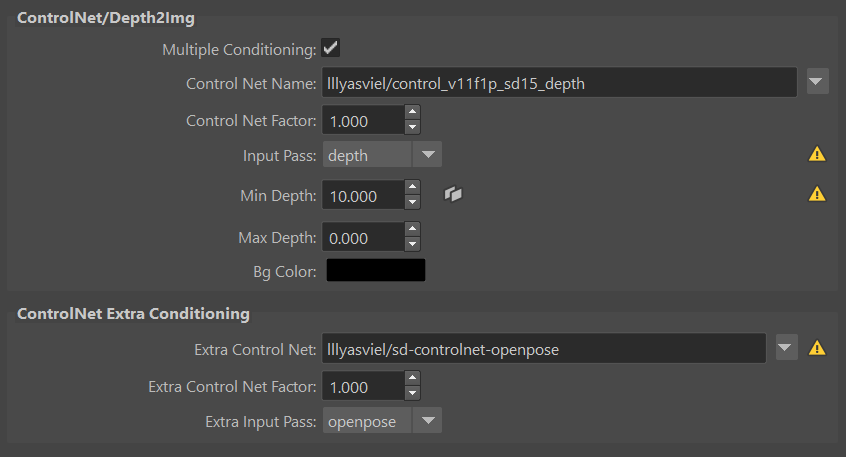

Generates an image from a prompt and a variety of passes. Up to two controlNet inputs can be used at the same time via the extraControlNet attributes.

Example

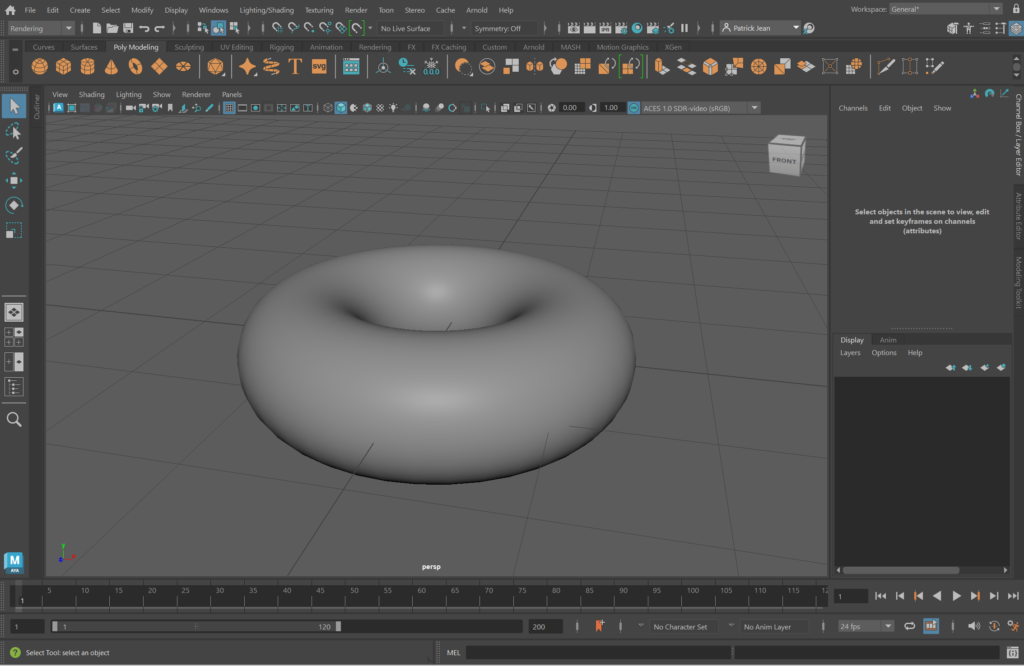

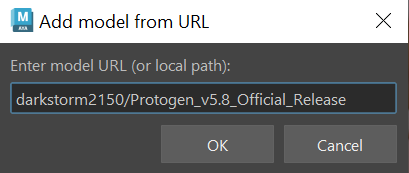

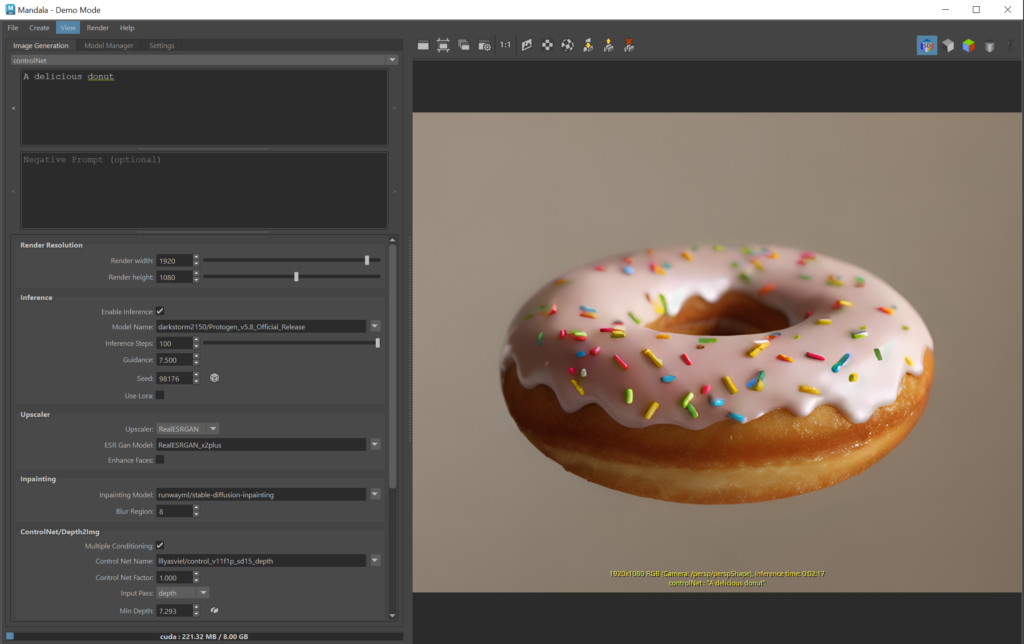

As a simple example, let’s make a delicious donut:

A delicious donut

That’s it! As you can see on the top left, various passes have been rendered. These passes are used as input by the controlNet. Try clicking on the pass icons to check them out.

Parameters

For the other parameters, refer to the diffusion page.