Generates an image based on your prompt + negative prompt. No inputs are used in this mode, and nothing in the 3D scene is actually rendered. You could use this mode, for instance, to generate a background landscape for your scene.

Example

So, let’s do just that. Let’s generate a Ghibli-style background:

Prompt: A fantasy open landscape, lush vegetation, Studio Ghibli background, colorful scenery, cliffs, waterfall, flowers, butterflies

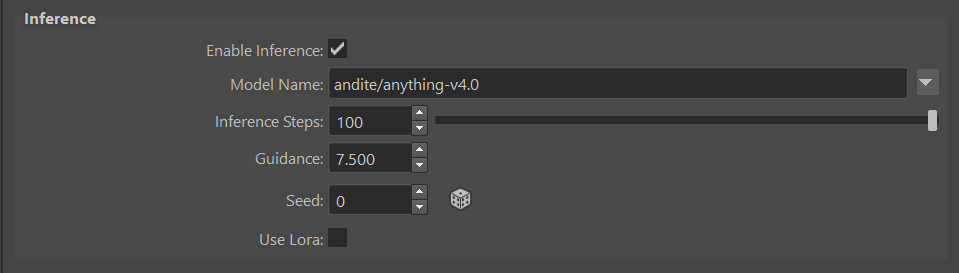

Parameters

Generates an image based on your prompt + negative prompt, and the input RGB pass.

prompt, usually at the expense of lower image quality.