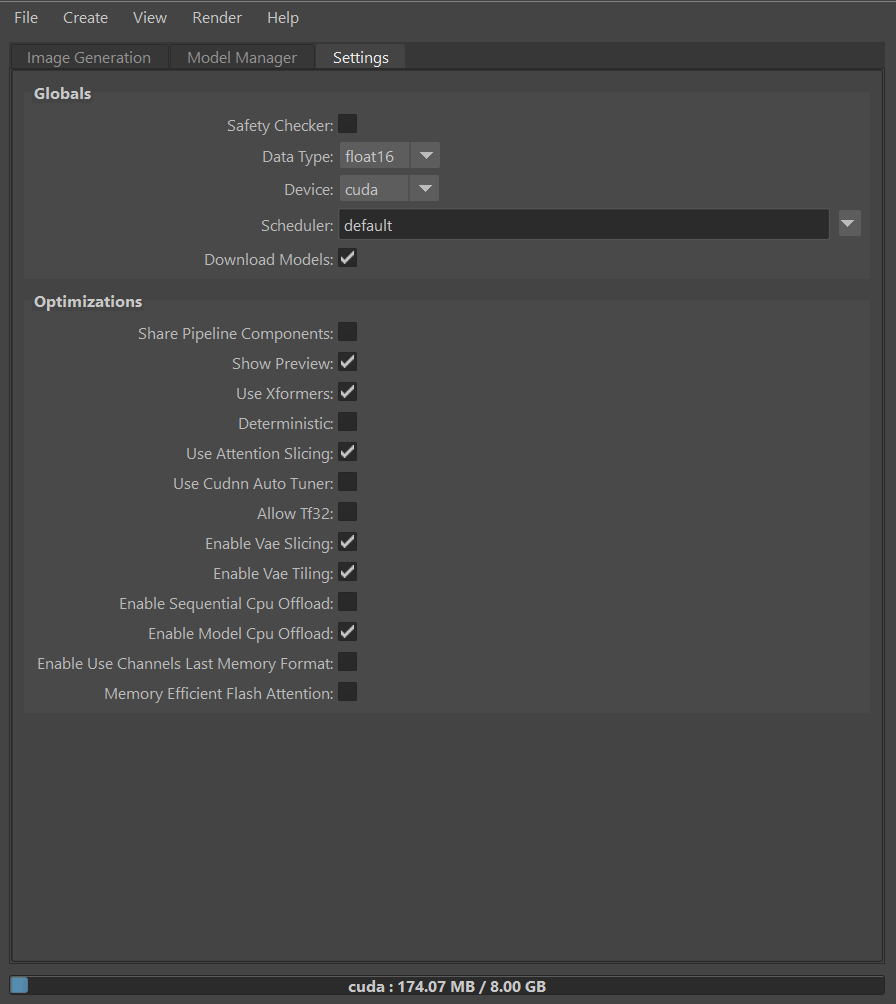

Globals

safetyChecker: Enable safety checks to prevent NSFW or harmful content.

dataType: The numeric precision used for inference. If you are limited by GPU memory and have less than 10 GB of GPU RAM available, use float16 precision instead of float32. float16 halves the memory needed to store the model weights, at the cost of reduced numerical precision.
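
As a rough illustration of the float32/float16 trade-off, the sketch below uses only Python's standard `struct` module: it computes how much memory the weights alone take in each format and shows the precision lost when a value is stored as float16. The 1-billion-parameter model size and the helper names `weights_gib` and `round_trip` are hypothetical; real GPU usage also includes activations and framework overhead.

```python
import struct

# Bytes per element for IEEE 754 half ("e") and single ("f") precision.
FP16_BYTES = struct.calcsize("e")
FP32_BYTES = struct.calcsize("f")


def weights_gib(num_params: int, bytes_per_param: int) -> float:
    """Memory needed just to store the weights, in GiB (activations not included)."""
    return num_params * bytes_per_param / 1024**3


def round_trip(value: float, fmt: str) -> float:
    """Store `value` in the given struct format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


num_params = 1_000_000_000  # hypothetical model size
print(f"float32 weights: {weights_gib(num_params, FP32_BYTES):.2f} GiB")
print(f"float16 weights: {weights_gib(num_params, FP16_BYTES):.2f} GiB")
# float16 has an 11-bit significand, so 0.1 is stored less accurately.
print(round_trip(0.1, "f"), round_trip(0.1, "e"))
```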

device: The device used for inference. Inference on the CPU can be very slow, so ideally this should always be set to GPU. At least an NVIDIA 30-series GPU with 8 GB or more of VRAM is recommended.

scheduler: The scheduler has a large impact on the generation process. Schedulers are also called samplers in other diffusion model implementations. Leaving this at the default automatically selects the best scheduler for the selected model.

downloadModels: Download the models automatically, either from the Hugging Face Hub or from the URL entered in the model manager tab. This can take some time, especially during the first inference.

Optimizations

sharePipelineComponents: Experimental. Share pipeline components such as the UNet, tokenizer or VAE, which can make loading models faster.

showPreview: Show a preview of the image during inference. This can slow the process down slightly, so you may want to disable it when rendering in batches.

useXformers: Use xFormers for both inference and training. Its optimized attention blocks provide both faster speed and reduced memory consumption.
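
Memory-efficient attention kernels, such as those in xFormers, avoid materializing the full attention score matrix. The general idea, processing keys and values in chunks while maintaining a running ("online") softmax, can be sketched in pure Python for a single query. This is an illustration of the technique under that assumption, not xFormers' actual code; `naive_attention`, `chunked_attention` and `chunk` are hypothetical names.

```python
import math


def naive_attention(q, keys, values):
    """Materialize every score, softmax them, then take the weighted sum of values."""
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(len(q)) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]


def chunked_attention(q, keys, values, chunk=2):
    """Same result, but only `chunk` scores exist at any time.

    The running max `m`, normalizer `denom` and unnormalized output `acc`
    are rescaled whenever a chunk contains a larger score than seen so far.
    """
    dim = len(values[0])
    scale = 1 / math.sqrt(len(q))
    m, denom, acc = -math.inf, 0.0, [0.0] * dim
    for start in range(0, len(keys), chunk):
        ks, vs = keys[start:start + chunk], values[start:start + chunk]
        scores = [sum(qi * ki for qi, ki in zip(q, k)) * scale for k in ks]
        new_m = max(m, max(scores))
        correction = math.exp(m - new_m)  # exp(-inf) == 0.0 on the first chunk
        denom *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, vs):
            w = math.exp(s - new_m)
            denom += w
            acc = [a + w * vd for a, vd in zip(acc, v)]
        m = new_m
    return [a / denom for a in acc]
```

Peak memory for the scores drops from the number of keys to `chunk`, while the output matches the naive version up to floating-point rounding.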

useAttentionSlicing: Enable sliced attention computation. When this option is enabled, the attention module splits the input tensor into slices and computes attention in several steps. This saves some memory in exchange for a small decrease in speed.
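
Attention slicing can be pictured as computing the same attention in smaller pieces: the result is unchanged, only the largest intermediate score matrix shrinks. This pure-Python sketch slices along query rows for simplicity; the actual implementation may slice along a different dimension (for example, attention heads). The function names and the `slice_size` parameter are illustrative assumptions.

```python
import math


def attention_rows(queries, keys, values):
    """Plain softmax attention.

    Returns the outputs and the number of scores a batched implementation
    would hold at once (len(queries) x len(keys)).
    """
    scale = 1 / math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) * scale for k in keys]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(wi * v[d] for wi, v in zip(w, values)) / z
                    for d in range(len(values[0]))])
    return out, len(queries) * len(keys)


def sliced_attention(queries, keys, values, slice_size):
    """Compute attention `slice_size` query rows at a time and track the peak score count."""
    out, peak = [], 0
    for start in range(0, len(queries), slice_size):
        part, n_scores = attention_rows(queries[start:start + slice_size], keys, values)
        out.extend(part)
        peak = max(peak, n_scores)
    return out, peak
```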

useCudnnAutoTuner: NVIDIA cuDNN supports many algorithms for computing a convolution. When this option is enabled, the autotuner runs a short benchmark and selects the kernel with the best performance on the given hardware for a given input size.
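
The autotuner idea (time each candidate once per input size, then reuse the winner) can be sketched with `timeit`. The `AutoTuner` class and the summation candidates are hypothetical stand-ins for cuDNN's convolution algorithms. In PyTorch this option most likely corresponds to `torch.backends.cudnn.benchmark = True`, but that mapping is an assumption.

```python
import math
import timeit
from typing import Callable, Dict, List, Tuple

Candidate = Tuple[str, Callable[[List[float]], float]]


def sum_loop(xs: List[float]) -> float:
    """Explicit Python loop summation."""
    total = 0.0
    for x in xs:
        total += x
    return total


# Hypothetical stand-ins for cuDNN's alternative convolution algorithms.
CANDIDATES: List[Candidate] = [("builtin", sum), ("loop", sum_loop), ("fsum", math.fsum)]


class AutoTuner:
    """Benchmark every candidate once per input size and cache the fastest one."""

    def __init__(self, candidates: List[Candidate], repeats: int = 3) -> None:
        self.candidates = dict(candidates)
        self.repeats = repeats
        self.cache: Dict[int, str] = {}

    def choose(self, data: List[float]) -> str:
        key = len(data)  # cuDNN keys on the full input configuration; length stands in here
        if key not in self.cache:  # only the first call per size pays the benchmark cost
            timings = {
                name: min(timeit.repeat(lambda: fn(data), number=10, repeat=self.repeats))
                for name, fn in self.candidates.items()
            }
            self.cache[key] = min(timings, key=timings.get)
        return self.cache[key]

    def run(self, data: List[float]) -> float:
        return self.candidates[self.choose(data)](data)


tuner = AutoTuner(CANDIDATES)
print(tuner.run([float(i) for i in range(10_000)]), tuner.cache)
```

This also shows why the option helps most when input sizes stay fixed: a new size triggers a new benchmark.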

allowTf32: TensorFloat-32 (TF32) is a math mode introduced with NVIDIA’s Ampere GPUs. When enabled, float32 matrix multiplications (GEMMs) are computed faster but with reduced numerical accuracy. For many programs this gives a significant speedup with negligible accuracy impact, but for some programs the reduced accuracy has a noticeable effect.
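
TF32 keeps float32's 8-bit exponent (and therefore its range) but only 10 explicit mantissa bits instead of 23. The sketch below emulates this by clearing the low 13 mantissa bits of a float32 value with `struct`. The helper names are hypothetical, and the exact rounding behaviour of the hardware may differ from this truncation. In PyTorch the corresponding switch is likely `torch.backends.cuda.matmul.allow_tf32`.

```python
import struct
from typing import Callable, Sequence

TF32_MASK = 0xFFFFE000  # keep sign, 8 exponent bits and the top 10 mantissa bits


def to_float32(x: float) -> float:
    """Round a Python float (double) to the nearest float32."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_tf32(x: float) -> float:
    """Emulate TF32 by zeroing the low 13 of float32's 23 mantissa bits (truncation)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & TF32_MASK))[0]


def dot(a: Sequence[float], b: Sequence[float], cast: Callable[[float], float]) -> float:
    """Dot product with inputs converted by `cast`; accumulation uses Python floats."""
    return sum(cast(x) * cast(y) for x, y in zip(a, b))


a, b = [0.1, 0.2, 0.3], [0.7, 0.8, 0.9]
print("float32:", dot(a, b, to_float32))
print("tf32:   ", dot(a, b, to_tf32))
```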

enableVaeSlicing: When this option is enabled, the VAE splits the input tensor into slices and decodes them in several steps. This saves some memory and allows larger batch sizes.
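
VAE slicing can be pictured as decoding the batch in chunks instead of all at once, so only one chunk's intermediate data needs to be in memory at a time. In this sketch, `decode_batch` and `ToyDecoder` are hypothetical; the toy decoder merely repeats each latent value four times in place of a real VAE and records the largest batch it was given.

```python
from typing import Callable, List

Latent = List[float]
Image = List[float]


class ToyDecoder:
    """Hypothetical stand-in for a VAE decoder.

    It "upsamples" each latent by repeating every value four times and records
    the largest batch it has been asked to decode at once.
    """

    def __init__(self) -> None:
        self.peak_batch = 0

    def __call__(self, batch: List[Latent]) -> List[Image]:
        self.peak_batch = max(self.peak_batch, len(batch))
        return [[v for v in latent for _ in range(4)] for latent in batch]


def decode_batch(decoder: Callable[[List[Latent]], List[Image]],
                 latents: List[Latent], slice_size: int = 1) -> List[Image]:
    """Decode `slice_size` latents per call instead of the whole batch at once."""
    images: List[Image] = []
    for start in range(0, len(latents), slice_size):
        images.extend(decoder(latents[start:start + slice_size]))
    return images


latents = [[float(i), float(i) + 0.5] for i in range(8)]
sliced_decoder = ToyDecoder()
images = decode_batch(sliced_decoder, latents, slice_size=1)
print(len(images), sliced_decoder.peak_batch)
```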

enableVaeTiling: When this option is enabled, the VAE splits the input tensor into tiles and encodes and decodes them in several steps. This saves a large amount of memory and allows larger images to be processed.
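
VAE tiling first needs a tile layout that covers the whole image with some overlap between neighbouring tiles. The sketch below only computes that layout; the tile size of 64, the overlap of 8 and the function names are assumptions for illustration. Real implementations typically also blend the overlapping regions to hide seams, which is omitted here.

```python
from typing import List, Tuple

Tile = Tuple[int, int, int, int]  # (top, left, height, width)


def tile_starts(length: int, tile: int, overlap: int) -> List[int]:
    """Start offsets of tiles covering [0, length), neighbours sharing >= `overlap` pixels."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    if tile >= length:
        return [0]  # a single (possibly smaller) tile covers everything
    starts = list(range(0, length - tile, tile - overlap))
    starts.append(length - tile)  # last tile ends exactly at the border
    return starts


def make_tiles(height: int, width: int, tile: int = 64, overlap: int = 8) -> List[Tile]:
    """All tiles of a height x width image, row by row."""
    return [
        (top, left, min(tile, height), min(tile, width))
        for top in tile_starts(height, tile, overlap)
        for left in tile_starts(width, tile, overlap)
    ]


for t in make_tiles(200, 100):
    print(t)
```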

enableSequentialCpuOffload: Offloads all models to the CPU using accelerate, significantly reducing memory usage. When enabled, the unet, text_encoder, vae and safety checker have their state dicts saved to the CPU and are then moved to torch.device('meta'); they are loaded to the GPU only when their specific submodule's forward method is called. Offloading therefore happens on a per-submodule basis. Memory savings are higher than with enableModelCpuOffload, but performance is lower.

enableModelCpuOffload: Offloads all models to the CPU using accelerate, reducing memory usage with a low impact on performance. Compared to enableSequentialCpuOffload, this method moves one whole model at a time to the GPU when its forward method is called, and the model stays on the GPU until the next model runs. Memory savings are lower than with enableSequentialCpuOffload, but performance is much better: the unet runs once per denoising step, so keeping it on the GPU for the whole loop avoids reloading it at every step.
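
The difference between the two offloading strategies can be shown with a small transfer-counting simulation. The model sizes below are hypothetical (in MB, split into equal submodules), and the simulation ignores activations, transfer overlap and GPU-to-CPU moves; it only counts CPU-to-GPU weight traffic and the largest amount of weights resident on the GPU at once.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical weight sizes in MB, each model split into equal submodules.
MODELS: Dict[str, List[int]] = {
    "text_encoder": [250, 250],
    "unet": [800, 800, 800, 800],
    "vae": [150, 150],
}
# One text-encoder pass, 20 denoising steps (one UNet call each), one VAE decode.
CALLS: List[str] = ["text_encoder"] + ["unet"] * 20 + ["vae"]


@dataclass
class OffloadStats:
    transfers_mb: int = 0  # total CPU-to-GPU weight traffic
    peak_gpu_mb: int = 0   # largest amount of weights on the GPU at the same time


def sequential_offload(models: Dict[str, List[int]], calls: List[str]) -> OffloadStats:
    """Each submodule is uploaded right before its forward pass and released after it."""
    stats = OffloadStats()
    for name in calls:
        for size in models[name]:
            stats.transfers_mb += size
            stats.peak_gpu_mb = max(stats.peak_gpu_mb, size)
    return stats


def model_offload(models: Dict[str, List[int]], calls: List[str]) -> OffloadStats:
    """A whole model is uploaded when called and stays until a different model runs."""
    stats = OffloadStats()
    resident = None
    for name in calls:
        size = sum(models[name])
        if name != resident:
            stats.transfers_mb += size
            resident = name
        stats.peak_gpu_mb = max(stats.peak_gpu_mb, size)
    return stats


print("sequential:", sequential_offload(MODELS, CALLS))
print("model:     ", model_offload(MODELS, CALLS))
```

Sequential offloading keeps the peak at one submodule but re-uploads the UNet on every step, while model offloading uploads each model once and holds a whole model on the GPU at a time.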

enableUseChannelsLastMemoryFormat: Channels-last is an alternative way of laying out NCHW tensors in memory: the logical dimension order (N, C, H, W) is preserved, but the elements are stored so that channels become the densest dimension (that is, images are stored pixel by pixel). Since not all operators currently support the channels-last format, it may result in worse performance, so it is best to try it and check whether it helps for your model.
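
The layout difference can be made concrete by computing element strides (how far apart in memory neighbouring elements are along each dimension) for a logical NCHW shape. The helper names are hypothetical; for a tensor converted with `memory_format=torch.channels_last`, PyTorch's `Tensor.stride()` should report the same values as `channels_last_strides`.

```python
from typing import Sequence, Tuple

Shape = Tuple[int, int, int, int]  # logical (N, C, H, W)


def contiguous_strides(shape: Shape) -> Tuple[int, int, int, int]:
    """Strides for the default NCHW layout: W varies fastest."""
    n, c, h, w = shape
    return (c * h * w, h * w, w, 1)


def channels_last_strides(shape: Shape) -> Tuple[int, int, int, int]:
    """Strides for the same logical tensor stored as NHWC: C varies fastest."""
    n, c, h, w = shape
    return (h * w * c, 1, w * c, c)


def offset(index: Sequence[int], strides: Sequence[int]) -> int:
    """Memory offset (in elements) of a logical (n, c, h, w) index."""
    return sum(i * s for i, s in zip(index, strides))


shape = (2, 3, 4, 5)
print(contiguous_strides(shape), channels_last_strides(shape))
```

With channels-last, the channel values of one pixel sit next to each other in memory, which is what the description means by storing images pixel by pixel.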

memoryEfficientFlashAttention: Experimental. May provide additional speedup and memory savings.